A note on language: Artificial image tools and apps are a developing technology, and many terms have been used in child protection and policing to describe the images of child sexual abuse produced using them. In this blog, we will refer to this as artificially generated child sexual abuse material.

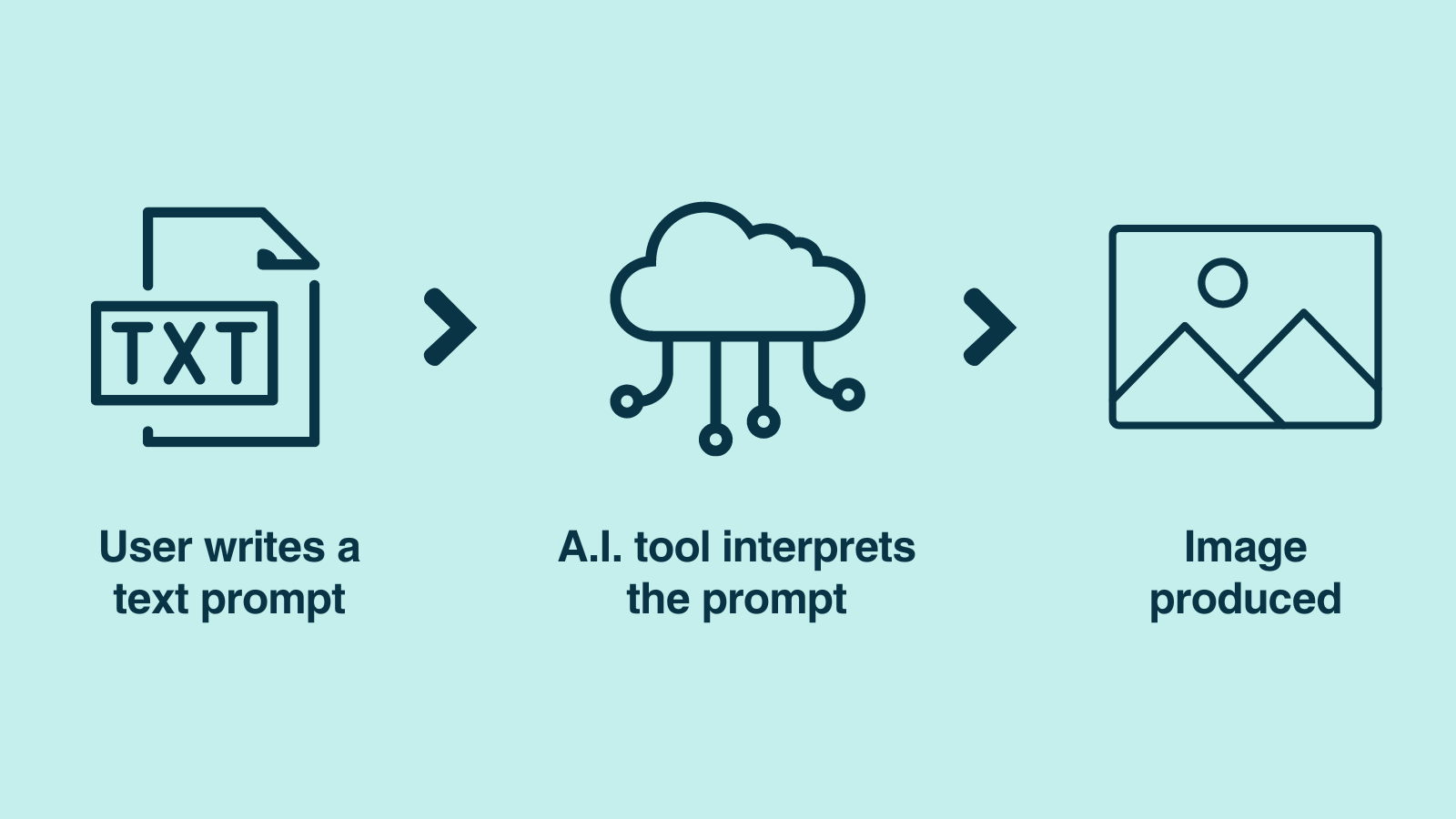

Artificial intelligence tools are developing at an astonishing speed. Not long ago, using artificial intelligence or ‘AI’ to create unique content at the click of a button sounded like science fiction, but today anyone with access to the internet can produce convincing, lifelike images of almost anything using easily available apps and software. These ‘generative’ artificial intelligence tools can be downloaded or accessed online and used to turn a simple text prompt into new written, image and video content in a matter of moments. As with any new technology, this is likely to have a knock-on impact for all sorts of different professions and sectors; some of these impacts will be positive and others much more concerning.

How artificial intelligence tools produce images.

Artificial intelligence tools can support our lives in many positive ways, from writing the first draft of an email to even reviewing scans and test results in healthcare. But sadly, artificial intelligence tools are also already being used for harmful and illegal purposes – including in the creation of sexual imagery of children. In this short blog, we will explain what artificially generated child sexual abuse material is, its legal status, and how professionals can use their existing skills to support children and young people who come into contact with, or are affected by, artificially generated child sexual abuse material.

From the emerging evidence and conversations with colleagues from various organisations, it’s clear that the scale of artificially generated child sexual abuse material is growing significantly. Last year, the Internet Watch Foundation identified nearly three thousand such images on just one website in the space of a month, and the National Crime Agency (NCA) has also identified the use of artificial intelligence tools in producing child sexual abuse material as a growing issue.

The contexts, harms and pathways to child sexual abuse are ever-changing, and these developments in artificial intelligence are likely to raise new concerns and questions. If you are a professional working directly with children and families, it’s important to remember that you already have the skills needed to effectively respond to concerns about child sexual abuse. You don’t need to become an expert in artificially generated child sexual abuse content, but it will help you to understand a bit about what it is and how to respond if you think an adult is viewing, making or sharing it, if a child’s image or likeness features in it, or if a child has potentially viewed or made these images themselves.

What is artificially generated child sexual abuse material?

Artificially generated child sexual abuse material describes images of child sexual abuse that are partially or entirely computer-generated. They are usually produced using software which converts a text description into an image. This technology is developing rapidly: the images created can now be very realistic, and recent examples are difficult to differentiate from unaltered photographs.

Many popular, publicly available artificial intelligence tools automatically block attempts to create abusive material, but the large number of child sexual abuse images detected that were made using them shows that individuals have found ways around this. Typically, the images are made using publicly available artificial intelligence tools that can be used and manipulated to produce images (and, increasingly, videos) depicting child sexual abuse.

Some artificially generated child sexual abuse material depicts children who appear to be entirely artificial or fictional, and it is often assumed this means no child has been harmed or affected. But most tools rely on thousands of existing images to inform or ‘train’ them, so genuine images of individuals are likely to have been used as reference material. We must remember it is fundamentally harmful to produce any child sexual abuse material, whether or not it features an identifiable victim. Some reports indicate that photographs of child sexual abuse have also been used to ‘train’ artificial intelligence tools, so children may have been sexually abused to produce these images in the first place.

There are tools and methods that allow users to deliberately make images or videos that feature the likenesses of real people. Concerningly, this can allow someone to create sexual abuse images and videos with children and young people as the subjects without those children being present, and without their knowledge or consent. Examples of this include the use of ‘deepfake’ tools, which can edit a child into existing explicit or abusive material, or apps which digitally remove the clothes of subjects in photographs.

For the most part, these tools are widely available and require little specialist knowledge or technical skill to use. While it can be difficult to consider, there are examples of children and young people using them to create sexually abusive content featuring their peers. Last November, the UK Safer Internet Centre said it shouldn’t come as a surprise that this is now a feature in harmful sexual behaviour by young people, and that some “children may be exploring the potential of AI image-generators without fully appreciating the harm they may be causing.”

We still have a lot to learn from victims and survivors about the immediate and long-term impacts of having artificially generated abusive images made of them, but the negative impact of explicit and abusive images circulating online is significant regardless of whether or not the child was present in the context depicted. Existing research tells us that the impacts of child sexual abuse in all other online contexts vary widely, and can be severe and lifelong:

When images of a child have been shared, there is the potential for the child to be revictimised over and over again, every time an image is watched, sent or received. This impact can persist into adulthood, with victims/survivors reporting that they worry constantly about being recognised by a person who has viewed the material, and some have been recognised in this way.

Key messages from research on child sexual abuse by adults in online contexts

Is artificially generated child sexual abuse material illegal to create, view and share?

Yes, it is illegal to create, view and share all sexual images of children under 18 produced using artificial intelligence. It is important to remember this applies to all material depicting child sexual abuse – it doesn’t matter if the material is created in a ‘conventional’ way using a camera, or created using artificial intelligence tools.

As a result of the recent rapid growth of artificial intelligence tools, there is some broad confusion about the legality of artificially generated child sexual abuse material. A recent survey by the Lucy Faithfull Foundation found that 40% of respondents either didn’t know or thought that this content was legal in the UK.

Not only is it illegal, but leaders in policing and law enforcement have warned that the proliferation of these images will likely make it even harder to identify children at risk of abuse. As artificially generated child sexual abuse images become more realistic, it will likely be more difficult for police forces to be sure whether a child needs identifying and safeguarding, and to undertake that identification process.

How should you respond if you are concerned?

Research tells us that professionals feel less confident responding to child sexual abuse in online contexts because they aren’t ‘experts’ in technology. And when it comes to technology seen as new, complex, or unusual, that concern can be even greater. In reality, the skills professionals should use to respond are the same as in any case of child sexual abuse.

Although you may not be certain about how images have been created, or worry that you don’t have specialist knowledge in responding to harm in this context, this shouldn’t change the overall protection and support response that should be followed for any concern about child sexual abuse.

Below we briefly outline some of the different contexts of abuse where artificially generated child sexual abuse images may feature and set out key initial steps you should take in response. In all of these cases we recommend you refer concerns to children’s social care, and a multi-agency response involving policing colleagues should begin. All images of child sexual abuse, artificially generated or not, should also be reported to the Internet Watch Foundation.

Responding to children captured in artificially generated child sexual abuse material

Identifying children depicted in images of child sexual abuse is a job for specialist policing teams. However, many professionals will have a role in supporting children whose image or likeness features in child sexual abuse images online.

As a professional, your response may be different depending on the individual circumstances a child is in. But in all cases, you should refer concerns to children’s social care and/or the police if you’re concerned that a child or young person has been or is at risk of immediate harm at any point.

The impact on victims of the circulation of images of sexual abuse of children can be as severe and varied as the abuse itself. In cases where the images are partially or entirely artificially generated, it’s important for professionals to be there to listen to children and young people, ask them what happened, believe them and tell them how you can help.

Support for children and young people should always respond to their own individual situation and needs, which you can do regardless of the technology used to harm them.

Remember – by identifying child sexual abuse early and providing a supportive response, you can play a role in reducing long-term impacts.

Responding to adults who have created, viewed or shared artificially generated child sexual abuse material

When responding to adults found in possession of any form of child sexual abuse material, professionals should build their understanding of who has accessed this material and why, and of the risk they may pose to the children around them, and should consider what safety plans need to be put in place to keep everyone safe from harm.

Even where you suspect, or an adult has told you, that the images were created using artificial intelligence, it is still important to undertake an assessment of risk and, if this has not happened already, report them to the police. Because we don’t have a clear risk framework specifically about artificial intelligence, or broader cases of online child sexual abuse, you should use the approaches you already use for assessing risk in cases of online sexual offending.

For example, where you have concerns about risk of harm to children within the family when a parent is arrested for artificially generated child sexual abuse images, remember to take a whole family approach to assessment of risk that considers all the relationships, vulnerabilities and strengths that exist within the family.

Our Managing risk and trauma after online sexual offending guide was created to help professionals safeguard the whole family when a parent or family member has accessed online sexual abuse material, and includes useful information you may want to consider in your assessment of risk.

Responding to children who may have viewed or created artificially generated child sexual abuse material

The digital world plays an increasingly significant role in children and young people’s day-to-day lives. They are likely to be early adopters of new technology, and it is therefore not surprising that there are examples emerging where children and young people are not only seeing artificially generated child sexual abuse material but also using the technology to create sexual images of other children and young people.

When responding to concerns that children and young people have viewed, shared or made artificially generated sexual images of children and young people, we must first and foremost remember that we are working with children and young people, and apply the same considerations as we would in other cases of harmful sexual behaviour. First, refer them to children’s social care and/or the police if you’re concerned that a child has been or is at risk of immediate harm, and consider the impact on each child involved.

Many of the young people you will work with in these contexts will, with the right intervention at the right time, be unlikely to present a risk as adults. You may also meet some young people whose behaviour is particularly worrying, which may indicate they have experienced harm or abuse of some form themselves. Their needs must be addressed in your professional response too, including, where relevant, with the family.

Importantly, we need to think about the context of this activity, as a part of the current culture around young people’s activity online. Children’s involvement in artificially generated child sexual abuse material can be incredibly varied, from viewing and sharing it to actively producing material that features themselves, their peers or siblings. These different contexts require different responses, but they are all within your existing professional skillset.

Preparing yourself to respond

Because this is a relatively new technology, there are understandably a number of aspects of artificially generated child sexual abuse material we don’t fully understand yet, in terms of the specific motivations behind creating, viewing or sharing it, its individual impact, and how to prevent it. But even though these image tools are new, and wider research and literature may need time to catch up, there is a range of resources and guidance from the CSA Centre and other organisations to help you:

- Key messages from research papers on child sexual abuse in online contexts: Last year the CSA Centre released two new summaries of the latest and best research on child sexual abuse in online contexts more widely, which are helpful first steps to informing your practice with the latest research.

- Managing risk and trauma after online sexual offending: our guide giving advice for professionals on how to support families affected by online child sexual abuse offending.

- Communicating with children: our practice resource designed to give professionals the knowledge and confidence to speak to children about sexual abuse. It can also be helpful when speaking to children where you’re concerned artificially generated child sexual abuse images depicting them have been made.

- Safety planning in education: our guide providing practical support for education professionals to respond to children’s needs and safety when incidents of harmful sexual behaviour occur. It will also be useful where there are concerns that artificially generated images are involved.

- Internet Watch Foundation (IWF): You can help remove all child sexual abuse material, including material that was produced using artificial intelligence, by reporting it to the site, app or network hosting it, and to the IWF. Under-18s can report images to Childline’s Report Remove.

- Sharing nudes and semi-nudes: advice for education settings working with children and young people: This guidance for designated safeguarding leads offers advice on how to respond to incidents of nude image sharing by children and young people. A version for education settings in Wales is also available.

- Guide to Indecent Images of Children (IIOC) Legislation: If you would like to learn more about the law surrounding child sexual abuse material, the Marie Collins Foundation has written a summary of legislation in England and Wales.

- Shore: The Lucy Faithfull Foundation’s website Shore offers advice to under-18s on sexual behaviour they’re worried about, including lots of guidance on harmful content they might see online.

- If you have concerns about yourself or someone else, explore other helpful organisations on our Get Support page.